
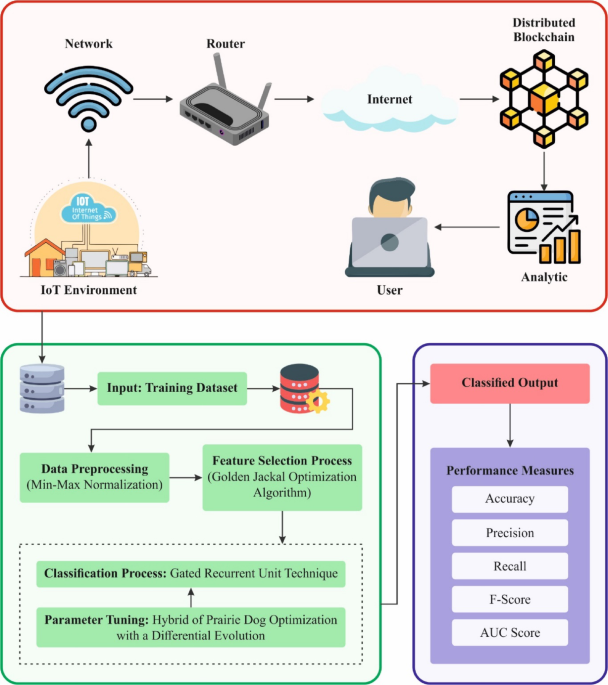

This study develops an LBCCD-GJO method for IoT. The main aim of the proposed method is to identify and classify cyberattacks in the blockchain-assisted IoT environment. To accomplish this, the proposed LBCCD-GJO model involves several stages: data pre-processing, feature selection, classification, and hyper-parameter tuning. Figure 1 shows the complete workflow of the LBCCD-GJO model.

Overall workflow of the LBCCD-GJO model.

Stage 1: Data pre-processing

The proposed LBCCD-GJO technique initially applies data pre-processing using min–max normalization to convert input data into a usable format31. This method is chosen because it scales all features into a defined range, typically between 0 and 1, ensuring that no feature dominates others due to differences in magnitude. This normalization helps improve the convergence speed during model training and enhances the stability of the learning algorithm. Compared to other methods, such as Z-score normalization, min–max normalization guarantees a fixed, bounded output range, although the scaling is determined by the observed minimum and maximum and is therefore affected by extreme values. Moreover, because it is a linear rescaling, it preserves the shape of the original distribution of the data, which can be critical for models that depend on the relationships between features. By utilizing min–max normalization, the model processes the input data more effectively and accurately, which supports generalization and performance in real-world applications. This approach is particularly valuable when data values must be uniformly scaled to facilitate learning complex patterns.

Min–max normalization is a widely used data pre-processing method that scales feature values into a particular range, here [0, 1]. In the context of blockchain cybersecurity for the IoT framework, this approach ensures that data from different IoT devices, which often have diverse scales and measurement units, are normalized consistently. This enhances the accuracy and efficiency of models such as those used for optimization or anomaly detection. Min–max normalization reduces the biases caused by large differences in scale and helps the blockchain-based method process IoT data more efficiently.
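The scaling described above can be sketched in a few lines. This is only an illustrative sketch, not the authors' code: the function name `min_max_normalize` and the `eps` guard for constant features are assumptions introduced here.

```python
import numpy as np

def min_max_normalize(X, eps=1e-12):
    """Scale every column (feature) of X into [0, 1].

    eps is a hypothetical guard so that constant columns do not divide by zero;
    such columns are mapped to 0.
    """
    X = np.asarray(X, dtype=float)
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    return (X - col_min) / np.maximum(col_range, eps)

# Two features on very different scales end up in the same range.
readings = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
scaled = min_max_normalize(readings)
```

In practice the minimum and range would be computed on the training split only and reused for unseen data.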

Stage 2: GJO-based feature selection

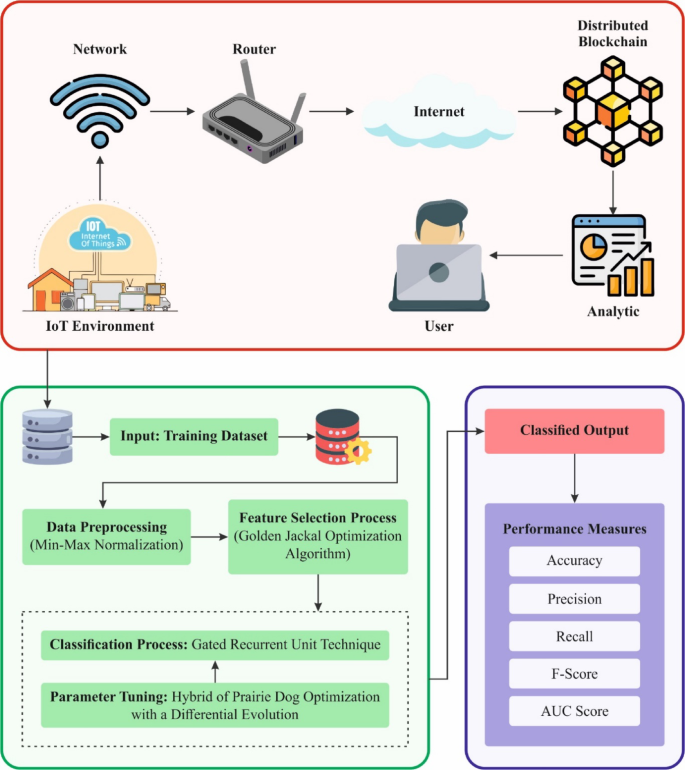

In this section, the feature selection process is implemented by employing the GJO model32. This model is chosen because it can effectively identify the most relevant features while minimizing the impact of irrelevant or redundant data. GJO is a nature-inspired optimization algorithm that replicates the hunting behaviour of golden jackals, enabling it to explore the feature space and select a suitable subset of features. Compared to other feature selection techniques like genetic algorithms (GAs) or particle swarm optimization (PSO), GJO offers a good balance between exploration and exploitation, which leads to more accurate feature selection. This in turn yields improved model accuracy, reduced computational complexity, and faster training times. Furthermore, the robustness of the GJO model in handling noisy or high-dimensional datasets makes it appropriate for cyberattack detection, where the data can often be noisy and complex. By reducing dimensionality, GJO-based feature selection also improves the model’s interpretability and generalization ability. Figure 2 illustrates the steps involved in the GJO methodology.

Steps involved in the GJO method.

GJO is an intelligent optimization technique that imitates the cooperative hunting behaviour of male and female golden jackals. The golden jackal is a highly intelligent animal, and its hunting behaviour shows cooperative and tactical features. GJO offers several advantages: a robust global search ability (by simulating the tracking and predation behaviours of the golden jackal, the technique can efficiently avoid falling into a local optimum); fast convergence (its exploration and exploitation stages let it avoid premature convergence while maintaining search efficiency); ease of combination with other techniques (GJO can be joined with other models to build hybrid optimizers that enhance overall performance); and the ability to handle multi‐objective optimization problems (many real-world problems have several objectives, and GJO can search for a suitable solution among them). The GJO technique comprises three vital phases: searching for, surrounding, and attacking the prey.

(1) Initialization of the population:

$${Y}_{0}={Y}_{\text{min}}+rand\cdot \left({Y}_{\text{max}}-{Y}_{\text{min}}\right)$$

(1)

Here, \({Y}_{0}\) denotes the initial location, and \(rand\) is a randomly generated number in \([0,1]\). The matrix form of \(Prey\) is given below:

$$Prey = \left[ {\begin{array}{*{20}l} {Y_{1,1} } \hfill & \ldots \hfill & {Y_{1,n} } \hfill \\ \vdots \hfill & \ddots \hfill & \vdots \hfill \\ {Y_{m,1} } \hfill & \ldots \hfill & {Y_{m,n} } \hfill \\ \end{array} } \right]$$

(2)

Here, \(m\) signifies the number of prey, \(n\) is the dimension of the problem, and \({Y}_{m,n}\) denotes the \(n\)th dimension of the location of the \(m\)th prey. The fitness matrix for all prey is formulated below:

$$F_{OA} = \left[ {\begin{array}{c} {f\left( {Y_{1,1} , \ldots ,Y_{1,n} } \right)} \\ \vdots \\ {f\left( {Y_{m,1} , \ldots ,Y_{m,n} } \right)} \\ \end{array} } \right]$$

(3)

Here, \(f()\) denotes the fitness function (FF). The prey with the best fitness value is chosen as the male jackal, and the one with the second-best value as the female jackal.
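As a rough illustration of Eqs. (1) to (3), the population can be drawn uniformly between the bounds and the two leaders picked by fitness. The names `init_population` and `pick_leaders` are hypothetical, and the sketch assumes that a lower fitness value is better, in line with the minimized FF of Eq. (15).

```python
import numpy as np

def init_population(m, n, y_min, y_max, rng):
    # Eq. (1): Y0 = Ymin + rand * (Ymax - Ymin), drawn for each prey and dimension.
    return y_min + rng.random((m, n)) * (y_max - y_min)

def pick_leaders(prey, fitness_fn):
    # Eq. (3): evaluate the fitness of every row of the Prey matrix of Eq. (2).
    scores = np.array([fitness_fn(row) for row in prey])
    order = np.argsort(scores)  # ascending: best (lowest) first, by assumption
    male, female = prey[order[0]].copy(), prey[order[1]].copy()
    return male, female, scores

rng = np.random.default_rng(0)
prey = init_population(m=5, n=3, y_min=-1.0, y_max=1.0, rng=rng)
# A toy sphere function stands in for the real fitness function.
male, female, scores = pick_leaders(prey, lambda y: float(np.sum(y ** 2)))
```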

(2) Search for prey:

During the searching stage, the female jackal mostly follows the male.

$${Y}_{1}(t)={Y}_{M}(t)-E\cdot \left|{Y}_{M}\left(t\right)-{r}_{1}\cdot Prey\left(t\right)\right|$$

(4)

$${Y}_{2}(t)={Y}_{FM}(t)-E\cdot \left|{Y}_{FM}\left(t\right)-{r}_{1}\cdot Prey\left(t\right)\right|$$

(5)

Here, \({Y}_{M}(t)\) and \({Y}_{FM}(t)\) are the locations of the male and female jackals at the \(t\)th iteration, respectively; \(t\) denotes the present iteration count; \(Prey(t)\) is the location of the prey at the \(t\)th iteration; \({Y}_{1}(t)\) and \({Y}_{2}(t)\) are the updated locations of the male and female jackals after the \(t\)th iteration, respectively; and \(E\) is the escape energy of the prey, which is formulated below:

$$E={E}_{1}\cdot {E}_{0}$$

(6)

$${E}_{0}=2\cdot r-1$$

(7)

$${E}_{1}={c}_{1}\cdot \left(1-\frac{t}{T}\right)$$

(8)

Here, \({E}_{0}\) is the initial state of the prey energy, \({E}_{1}\) describes the decrease of the prey energy, \(r\) denotes a randomly generated value between 0 and 1, and \({c}_{1}\) is set to 0.5. \(t\) indicates the present iteration count, while \(T\) indicates the maximum iteration count. \({r}_{1}\) is a random number based on the Lévy distribution:

$${r}_{1}=0.5\cdot LF\left(y\right)$$

(9)

$$LF(y)=0.01\cdot \left(\mu \cdot \sigma \right)/\left(\left|{v}^{\left(1/\beta \right)}\right|\right)$$

(10)

$$\sigma ={\left(\frac{\Gamma \left(1+\beta \right)\cdot \text{sin}\left(\frac{\pi \beta }{2}\right)}{\Gamma \left(\frac{1+\beta }{2}\right)\cdot \beta \cdot {2}^{\frac{\beta -1}{2}}}\right)}^{1/\beta }$$

(11)

Here, \(\mu\) and \(v\) are randomly generated numbers in \((0,1)\), and \(\beta\) is fixed at 1.5.
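Eqs. (6) to (11) can be sketched numerically as follows. The function names are hypothetical, \(\mu\) and \(v\) are assumed to be uniform on \((0,1)\), and the small lower clamp on \(v\) is an added guard against division by zero rather than part of the method.

```python
import math
import numpy as np

def levy_sigma(beta=1.5):
    # Eq. (11), with the whole fraction raised to the power 1/beta.
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)

def levy_flight(size, rng, beta=1.5):
    # Eq. (10): LF = 0.01 * (mu * sigma) / |v^(1/beta)|
    mu = rng.random(size)
    v = np.maximum(rng.random(size), 1e-12)  # guard (assumption)
    return 0.01 * (mu * levy_sigma(beta)) / np.abs(v ** (1 / beta))

def escape_energy(t, T, rng, c1=0.5):
    e0 = 2 * rng.random() - 1   # Eq. (7): initial energy in [-1, 1)
    e1 = c1 * (1 - t / T)       # Eq. (8): decreases linearly with t
    return e1 * e0              # Eq. (6)

rng = np.random.default_rng(42)
r1 = 0.5 * levy_flight(4, rng)            # Eq. (9)
E = escape_energy(t=10, T=100, rng=rng)
```

With \(\beta = 1.5\), \(\sigma\) evaluates to roughly 0.697, and \(|E|\) never exceeds \(c_1(1 - t/T)\).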

Finally, the updated location of the golden jackal is given below:

$$Y(t+1)=\left(\left({Y}_{1}\left(t\right)+{Y}_{2}\left(t\right)\right)\right)/2$$

(12)

(3) Encircling and attacking prey

The golden jackal encircling and attacking its prey is described by the following equations:

$${Y}_{1}(t)={Y}_{M}(t)-E\cdot \left|{r}_{1}\cdot {Y}_{M}\left(t\right)-Prey\left(t\right)\right|$$

(13)

$${Y}_{2}(t)={Y}_{FM}(t)-E\cdot \left|{r}_{1}\cdot {Y}_{FM}\left(t\right)-Prey\left(t\right)\right|$$

(14)
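The two update rules differ only in where \(r_1\) is applied, which a short sketch makes explicit. The name `gjo_update` and the boolean `explore` flag are hypothetical: the text here does not state the rule that switches between searching and attacking, so the caller is assumed to decide.

```python
import numpy as np

def gjo_update(prey, y_male, y_female, E, r1, explore):
    """One position update for a single prey vector.

    explore=True applies the searching rule, Eqs. (4)-(5);
    explore=False applies the encircling/attacking rule, Eqs. (13)-(14).
    """
    if explore:
        y1 = y_male - E * np.abs(y_male - r1 * prey)
        y2 = y_female - E * np.abs(y_female - r1 * prey)
    else:
        y1 = y_male - E * np.abs(r1 * y_male - prey)
        y2 = y_female - E * np.abs(r1 * y_female - prey)
    return (y1 + y2) / 2  # Eq. (12)

prey = np.array([1.0, 2.0])
male = np.array([0.0, 0.0])
female = np.array([2.0, 2.0])
new_pos = gjo_update(prey, male, female, E=0.5, r1=1.0, explore=True)
```

When \(E = 0\) the update collapses to the midpoint of the two jackals, which is a quick sanity check on an implementation.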

Finally, the golden jackal position is updated according to Eq. (12). The FF employed in the GJO technique is designed to balance the number of chosen features in every solution (to be minimized) against the classification accuracy (to be maximized) attained with those features. Eq. (15) gives the FF used to assess solutions.

$$Fitness=\alpha {\gamma }_{R}\left(D\right)+\beta \frac{\left|R\right|}{\left|C\right|}$$

(15)

Here, \({\gamma }_{R}(D)\) signifies the classification error rate of a given classifier, \(\left|R\right|\) denotes the cardinality of the chosen subset, and \(|C|\) refers to the total number of features. \(\alpha\) and \(\beta\) are two parameters weighting the importance of classification quality and subset length, with \(\alpha \in [0,1]\) and \(\beta =1-\alpha\).
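A small worked example of Eq. (15) may help. The function name `fs_fitness` and the value \(\alpha = 0.9\) are illustrative assumptions; the section does not report the value of \(\alpha\) that was used.

```python
def fs_fitness(error_rate, n_selected, n_total, alpha):
    """Eq. (15): alpha * error rate + beta * |R| / |C|, with beta = 1 - alpha."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    beta = 1.0 - alpha
    return alpha * error_rate + beta * (n_selected / n_total)

# 10% error with 5 of 20 features kept, alpha = 0.9 (illustrative value):
# 0.9 * 0.10 + 0.1 * 0.25 = 0.115
score = fs_fitness(error_rate=0.10, n_selected=5, n_total=20, alpha=0.9)
```

Lower values are better: a subset with the same error rate but fewer features receives a smaller score.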

Stage 3: GRU-based classification model

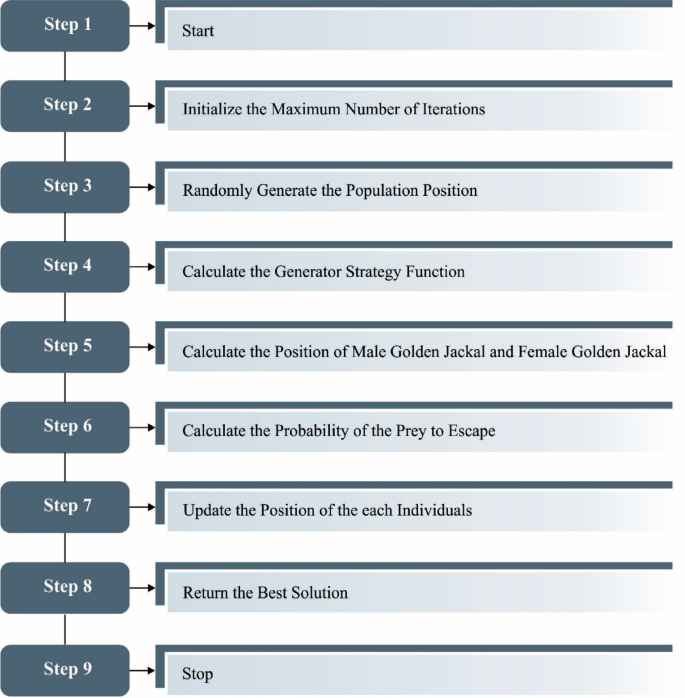

Furthermore, the proposed LBCCD-GJO model utilizes the GRU technique to classify cyberattacks33. This model is chosen for its efficiency in capturing temporal dependencies in sequential data, which is significant for cyberattack detection. GRUs are a type of RNN that can model long-range dependencies while being computationally more efficient than conventional LSTMs due to their simpler architecture. GRUs are particularly appropriate for real-time applications, where speed and performance are critical. Compared to other classification techniques, such as feedforward neural networks or support vector machines (SVM), GRUs can process sequential patterns over time, making them more adept at detecting evolving attack behaviours. The capability of the GRU model to handle variable-length input sequences and mitigate vanishing gradient problems additionally improves its robustness. This makes the GRU model well suited to dynamic and time-sensitive cyberattack data, resulting in improved accuracy and more reliable detection. The structure of the GRU model is depicted in Fig. 3.

The GRU is an RNN designed specifically to capture temporal relationships in sequential data. It adds gating mechanisms on top of the basic RNN framework to address problems such as exploding and vanishing gradients, which are commonly faced in classical RNNs. The GRU contains two main kinds of module: the memory cell and two gates, the reset and update gates. Their primary roles are as follows:

- Memory Cell: In the GRU, it is responsible for storing and passing on historical data. It acts as the hidden layer (HL) state: at every time step, the HL of the prior time step is taken as input and used to create the HL of the existing time step. A gate mechanism controls the memory cell updates.

- Update Gate: It controls how strongly the present input and the historical data affect the memory cell, combining the two to update it. The update gate value ranges from zero to one, where zero means the present input is disregarded entirely and one means the memory cell is replaced in full by the new candidate.

- Reset Gate: It determines the significance of historical data when evaluating the present HL. It regulates which data in the memory cell may be neglected, to avoid depending too heavily on previous data. The reset gate thus controls how much historical data is forgotten.

The GRU can therefore manage the weight and flow of historical data more effectively and model long-term dependencies in sequential data. Compared with classical RNNs, GRUs are simpler to train and better at capturing correlations and patterns in sequential data. The equations of the GRU are:

$$\begin{aligned} & u_{t} = \sigma \left( {W_{u} y_{t} + V_{u} s_{t - 1} + c_{u} } \right), \\ & v_{t} = \sigma \left( {W_{v} y_{t} + V_{v} s_{t - 1} + c_{v} } \right), \\ & \hat{s}_{t} = {\text{tanh}}\left( {W_{s} y_{t} + V_{s} \left( {v_{t} \odot s_{t - 1} } \right) + c_{s} } \right), \\ & s_{t} = \left( {1 - u_{t} } \right) \odot s_{t - 1} + u_{t} \odot \hat{s}_{t} \\ \end{aligned}$$

(16)

where

- \(\sigma\): The activation function of the gates, here the sigmoid function. It maps its input to a value between zero and one. The activation function equation is

$$\sigma (x)=\frac{1}{1+{e}^{-x}}.$$

- \(tanh\): The hyperbolic tangent activation function. It maps its input to a value between -1 and 1. The hyperbolic tangent function equation is

$$\text{tanh }\left(x\right)=\frac{{e}^{x}-{e}^{-x}}{{e}^{x}+{e}^{-x}}.$$

- \({W}_{u},{W}_{v},{W}_{s}\): The weight matrices applied to the existing input \({y}_{t}\).

- \({V}_{u},{V}_{v},{V}_{s}\): The weight matrices applied to the HL \({s}_{t-1}\) from the earlier time step.

- \({c}_{u},{c}_{v},{c}_{s}\): The bias vectors of the update gate, the reset gate, and the candidate state.

- \({y}_{t}\): The input vector at the existing time step.

- \({s}_{t-1}\): The HL vector of the earlier time step.

- \(\odot\): Element-wise multiplication (the Hadamard product). It multiplies the elements at corresponding positions of two vectors of the same dimension.

- \((1-{u}_{t})\): The complement of the update gate in the update of the HL \({s}_{t}\) at the existing time step. It balances the contribution of the earlier HL \({s}_{t-1}\) against that of the present candidate HL \({\widehat{s}}_{t}\).

- \(+\): The usual addition operator, adding vectors element by element.
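A single GRU time step following Eq. (16) can be written directly in NumPy. This is a minimal sketch with hypothetical names (`gru_step`, the parameter dictionary `p`); it is not the training code used in the study, which would normally rely on a deep learning framework.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(y_t, s_prev, p):
    """One step of Eq. (16); p maps the names W_*, V_*, c_* to arrays."""
    u = sigmoid(p["W_u"] @ y_t + p["V_u"] @ s_prev + p["c_u"])            # update gate
    v = sigmoid(p["W_v"] @ y_t + p["V_v"] @ s_prev + p["c_v"])            # reset gate
    s_hat = np.tanh(p["W_s"] @ y_t + p["V_s"] @ (v * s_prev) + p["c_s"])  # candidate HL
    return (1.0 - u) * s_prev + u * s_hat                                 # new HL

# With all-zero parameters both gates equal 0.5 and the candidate is 0,
# so the new state is exactly half of the previous one.
n_in, n_hid = 3, 2
p = {k: np.zeros((n_hid, n_in)) for k in ("W_u", "W_v", "W_s")}
p.update({k: np.zeros((n_hid, n_hid)) for k in ("V_u", "V_v", "V_s")})
p.update({k: np.zeros(n_hid) for k in ("c_u", "c_v", "c_s")})
s_t = gru_step(np.array([0.3, 0.1, 0.2]), np.array([1.0, -2.0]), p)
```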

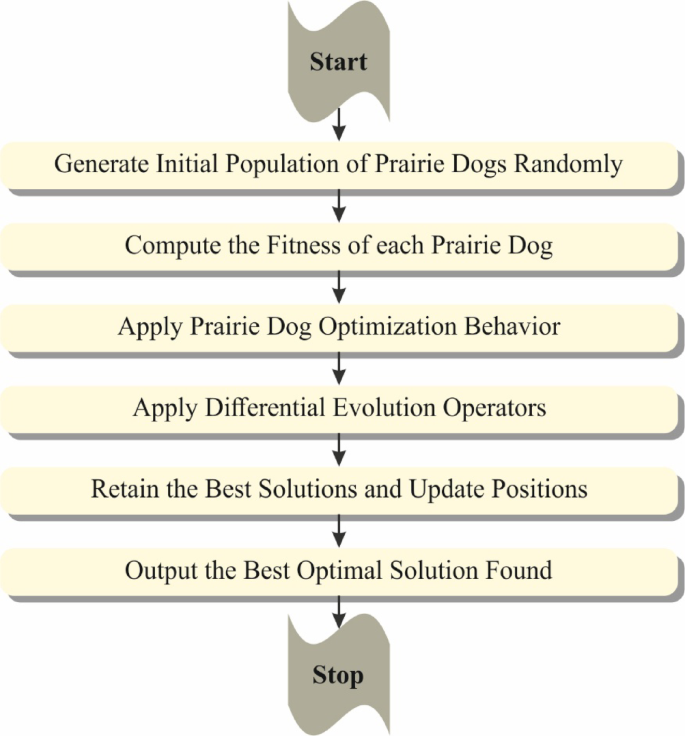

Stage 4: PDO-DE-based hyperparameter tuning model

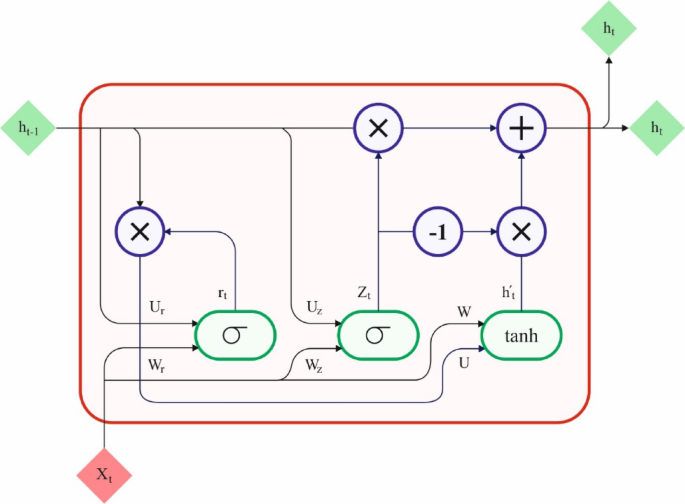

Finally, the hybrid PDO-DE technique performs the hyperparameter selection of the GRU model34. This technique is chosen for its capability to optimize the hyperparameters of the GRU model, improving performance by selecting the best combination of parameters. The hybrid approach integrates the merits of PDO and DE, utilizing PDO’s exploration capabilities and DE’s exploitation strengths to fine-tune hyperparameters. Unlike conventional grid or random search methods, PDO-DE is more adaptive and effective in navigating complex, high-dimensional search spaces. The hybridization improves its capability to find good solutions while avoiding local minima, resulting in higher model accuracy. Furthermore, the PDO-DE model offers faster convergence and needs fewer computational resources than other optimization techniques like GA or PSO. This makes it appropriate for cyberattack detection, where rapid model training and high performance are crucial. By optimizing hyperparameters effectively, PDO-DE helps the GRU model operate efficiently, improving detection results. Figure 4 specifies the steps involved in the PDO-DE model.

Steps involved in the PDO-DE method.

The PDO technique draws inspiration from the behaviour of prairie dogs and is used to find the best solution for a given optimization problem. The activity of prairie dogs includes coming out of their burrows, moving about, and returning. PDO treats the prairie dogs as candidate solutions from which the optimum solution to the problem is identified. A vector represents the position of the \(i\)th prairie dog in a specific coterie. Equation (17) defines the matrix holding the locations of every coterie (COT) in the colony.

$$COT=\left[\begin{array}{cccc}CO{T}_{1,1}& CO{T}_{1,2}& \dots & CO{T}_{1,d}\\ CO{T}_{2,1}& CO{T}_{2,2}& \dots & CO{T}_{2,d}\\ \vdots & \vdots & \ddots & \vdots \\ CO{T}_{s,1}& CO{T}_{s,2}& \dots & CO{T}_{s,d}\end{array}\right]$$

(17)

Here, \(CO{T}_{i,j}\) represents the \(j\)th dimension of the \(i\)th coterie in the colony. Equation (18) gives the positions of all prairie dogs in a coterie.

$$PD=\left[\begin{array}{cccc}P{D}_{1,1}& P{D}_{1,2}& \dots & P{D}_{1,d}\\ P{D}_{2,1}& P{D}_{2,2}& \dots & P{D}_{2,d}\\ \vdots & \vdots & \ddots & \vdots \\ P{D}_{m,1}& P{D}_{m,2}& \dots & P{D}_{m,d}\end{array}\right]$$

(18)

\(P{D}_{i,j}\) signifies the \(j\)th dimension of the \(i\)th prairie dog in a coterie, where \(m\) and \(s\) denote the numbers of prairie dogs and coteries in Eqs. (18) and (17), respectively. The coterie and prairie dog positions are assigned using a uniform distribution, as shown in Eqs. (19) and (20).

$$CO{T}_{i,j}=U\left(0,1\right)\times \left({U}_{j}-{L}_{j}\right)+{L}_{j}$$

(19)

$$P{D}_{i,j}=U\left(0,1\right)\times \left({U}_{j}-{L}_{j}\right)+{L}_{j}$$

(20)

Here, \({U}_{j}\) and \({L}_{j}\) signify the upper and lower bounds of the \(j\)th dimension, and \(U(0,1)\) is a random number uniformly distributed between 0 and 1. The PDO technique alternates between exploration and exploitation depending on four conditions. The total number of repetitions, \(Maxrep\), is divided into four parts, of which two are assigned to exploration and the remaining two are devoted to exploitation. Exploration is further divided into two strategies depending on the value of the current repetition \(rep\), according to the conditions stated in Eqs. (21) and (22).

Exploration phase

The construction of new burrows depends on the quality of the selected food source. Equation (21) gives the procedure for updating locations in this stage.

$$PD{O}_{i+1,j+1}=GPDOBes{t}_{i,j}-eCPDOBes{t}_{i,j}\times \rho -CPD{O}_{i,j}\times Levy\left(L\right)\quad \forall \; rep<\frac{\text{Max}\,rep}{4}$$

(21)

The second strategy evaluates the quality of previously found food sources and the digging strength. New burrows are built according to this digging strength, which decreases as the number of repetitions increases, limiting the number of burrows that can be built. Equation (22) gives the position update for building burrows.

$$PD{O}_{i+1,j+1}=GPDOBes{t}_{i,j}\times rPDO\times {D}_{S}\times Levy\left(L\right)\quad \forall \; \frac{\text{Max}\,rep}{4}\le rep<\frac{\text{Max}\,rep}{2}$$

(22)

The current best solution is denoted \(GPDOBes{t}_{i,j}\), and its effect is evaluated by \(eCPDOBes{t}_{i,j}\), as given in Eq. (23). The food source alarm is denoted \(\rho\) and has a constant value of 0.1 kHz. \(rPDO\) is the location of a randomly generated solution, and the cumulative effect of every prairie dog is denoted \(CPD{O}_{i,j}\), as defined in Eq. (24). The digging strength of the coterie, denoted \({D}_{S}\), depends on the quality of the food source and takes a random value according to Eq. (25). The Lévy distribution, denoted \(Levy(L)\), is applied to explore the problem space more efficiently.

$$eCPDOBes{t}_{i,j}=GPDOBes{t}_{i,j}\times \Delta +\frac{PD{O}_{i,j}\times mean\left(PD{O}_{n,s}\right)}{GPDOBes{t}_{i,j}\times \left({U}_{j}-{L}_{j}\right)+\Delta }$$

(23)

$$CPD{O}_{i,j}=\frac{GPDOBes{t}_{i,j}-rPD{O}_{i,j}}{GPDOBes{t}_{i,j}+\Delta }$$

(24)

$${D}_{S}=1.5\times r\times {\left(1-\frac{rep}{\text{Max}\,rep}\right)}^{2\frac{rep}{\text{Max}\,rep}}$$

(25)

Here, \(r\) introduces randomness to support exploration and takes the value \(-1\) or \(1\). \(\Delta\) accounts for any differences between prairie dogs, although the implementation assumes they are identical. \(rep\) signifies the present iteration, whereas \(Maxrep\) denotes the maximum number of repetitions allowed.

Exploitation stage

PDO uses the exploitation stage to search thoroughly in the promising regions found during the exploration phase. The two strategies are given by Eqs. (26) and (27). PDO switches between them depending on the conditions \(Maxrep/2\le rep<3Maxrep/4\) and \(3Maxrep/4\le rep\le Maxrep\), as stated before.

$$PD{O}_{i+1,j+1}=GPDOBes{t}_{i,j}-eCPDOBes{t}_{i,j}\times \varepsilon -CPD{O}_{i,j}\times rand\quad \forall \; \frac{\text{Max}\,rep}{2}\le rep<3\frac{\text{Max}\,rep}{4}$$

(26)

$$PD{O}_{i+1,j+1}=GPDOBes{t}_{i,j}-PE\times rand\quad \forall \; 3\frac{\text{Max}\,rep}{4}\le rep\le \text{Max}\,rep$$

(27)

Here, \(GPDOBes{t}_{i,j}\) is the best solution found so far, while \(eCPDOBes{t}_{i,j}\) denotes the effect of the best solution. \(\varepsilon\) signifies the quality of the food source, while \(CPD{O}_{i,j}\) is the cumulative effect defined in Eq. (24). The effect of the predator, \(PE\), is given by Eq. (28), and \(rand\) is a randomly generated value between \(0\) and 1.

$$PE=1.5{\left(1-\frac{rep}{\text{Max}rep}\right)}^{2\frac{rep}{\text{ Max}rep}}$$

(28)
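The iteration-dependent quantities of PDO can be sketched as below. The function names are hypothetical, the four-way schedule follows the conditions attached to Eqs. (21), (22), (26), and (27), and Eq. (25) is read here with the same exponent \(2\,rep/Maxrep\) as Eq. (28), which is an assumption about the intended form.

```python
def pdo_phase(rep, max_rep):
    """Return which of the four PDO update rules applies at repetition rep."""
    if rep < max_rep / 4:
        return "explore_1"   # Eq. (21)
    if rep < max_rep / 2:
        return "explore_2"   # Eq. (22)
    if rep < 3 * max_rep / 4:
        return "exploit_1"   # Eq. (26)
    return "exploit_2"       # Eq. (27)

def predator_effect(rep, max_rep):
    # Eq. (28): PE = 1.5 * (1 - rep/Maxrep)^(2 * rep/Maxrep)
    frac = rep / max_rep
    return 1.5 * (1.0 - frac) ** (2.0 * frac)

def digging_strength(rep, max_rep, r):
    # Eq. (25), under the stated assumption; r is -1 or 1.
    return r * predator_effect(rep, max_rep)

schedule = [pdo_phase(rep, 100) for rep in (0, 25, 50, 75, 100)]
```

Both quantities start at 1.5 in magnitude and decay to 0 at the last repetition, so late updates move the population less.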

The differential evolution algorithm (DEA) is a robust, effective search method for complex, continuous, non-linear functions. The conventional DE method starts by initializing a population of \(N\) individuals, each represented by a vector \({\overrightarrow{X}}_{i}=\left({X}_{i1},{X}_{i2}, {X}_{i3}, \dots ,{X}_{in}\right)\), \(i=1,2,3,\dots ,N\), where \(n\) represents the dimension of the problem. The DEA combines three main operators: mutation, crossover, and selection. The mutation and crossover operators are applied to generate new candidate vectors, while the selection operator decides whether children or parents survive into the next generation.

Mutation phase

A mutant vector \({\overrightarrow{V}}_{i}=({v}_{i1}, {v}_{i2}, {v}_{i3},\dots , {v}_{in})\) is formed using a mutation operator. The DE/best/1 strategy is often used and is defined in Eq. (29):

$${\overrightarrow{V}}_{i}\left(t\right)={\overrightarrow{X}}^{*}\left(t\right)+F\left({\overrightarrow{X}}_{\alpha }\left(t\right)-{\overrightarrow{X}}_{\beta }\left(t\right)\right)$$

(29)

Here, \(t\) signifies the present iteration, and \({\overrightarrow{X}}^{*}(t)\) denotes the best individual, with the best fitness \(f\left({\overrightarrow{X}}^{*}\right)\). \(\alpha\) and \(\beta\) are two indices chosen at random from the interval \([1,N]\) such that \(\alpha\), \(\beta\), and \(i\) all differ from each other (\(\alpha \ne \beta \ne i\in \{1,\dots ,N\}\)). Moreover, \(F\in [0,1]\) signifies the mutation scaling factor.

Crossover phase

The crossover operator is applied to every mutant individual \({\overrightarrow{V}}_{i}\) and its related target individual \({\overrightarrow{X}}_{i}\) to yield a trial vector, \({\overrightarrow{U}}_{i}=\left({u}_{i1},{u}_{i2},{u}_{i3},\dots ,{u}_{in}\right)\). Binomial and exponential crossovers are the commonly used crossover strategies. The binomial crossover is formulated below:

$${u}_{i,j}\left(t\right)=\left\{\begin{array}{ll}{v}_{i,j}\left(t\right), & \text{if } {r}_{j}\le CR \text{ or } j=R;\\ {x}_{i,j}\left(t\right), & \text{otherwise}\end{array}\right.$$

(30)

Here, \(R\) signifies a randomly chosen dimension index from \(1, 2, \dots, n\). The crossover rate \((CR)\) ranges from \(0\) to 1, and \({r}_{j}\in [0,1]\) is a random number uniformly distributed between \(0\) and 1.

Selection phase

This selection strategy helps maintain diversity compared with tournament, fitness‐proportional, and rank‐based selection. The selection operator decides which of the trial individual \({\overrightarrow{U}}_{i}(t)\) and its target counterpart \({\overrightarrow{X}}_{i}(t)\) survives. For minimization problems, the rule is given in Eq. (31):

$${\overrightarrow{X}}_{i}\left(t+1\right)=\left\{\begin{array}{ll}{\overrightarrow{U}}_{i}\left(t\right), & \text{if } f\left({\overrightarrow{U}}_{i}\left(t\right)\right)\le f\left({\overrightarrow{X}}_{i}\left(t\right)\right)\\ {\overrightarrow{X}}_{i}\left(t\right), & \text{otherwise}\end{array}\right.$$

(31)

where \(f\) denotes an objective function.
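The three DE operators can be combined into one generation as in the sketch below. It assumes a minimization problem and a population of at least three individuals; the function name `de_best_1_bin` and the values of \(F\) and \(CR\) in the usage lines are illustrative, not settings reported in the study.

```python
import numpy as np

def de_best_1_bin(pop, f, F, CR, rng):
    """One DE generation: DE/best/1 mutation, binomial crossover, greedy selection."""
    N, n = pop.shape
    fit = np.array([f(x) for x in pop])
    best = pop[np.argmin(fit)].copy()
    new_pop = pop.copy()
    for i in range(N):
        # alpha and beta: two distinct indices, both different from i
        others = [k for k in range(N) if k != i]
        a, b = rng.choice(others, size=2, replace=False)
        v = best + F * (pop[a] - pop[b])      # Eq. (29)
        R = rng.integers(n)                   # dimension always taken from v
        mask = rng.random(n) <= CR
        mask[R] = True
        u = np.where(mask, v, pop[i])         # Eq. (30)
        if f(u) <= fit[i]:                    # Eq. (31)
            new_pop[i] = u
    return new_pop

def sphere(x):
    # Toy objective used only for this illustration.
    return float(np.sum(x ** 2))

rng = np.random.default_rng(1)
pop = rng.uniform(-5.0, 5.0, size=(8, 4))
next_pop = de_best_1_bin(pop, sphere, F=0.5, CR=0.9, rng=rng)
```

Because selection is greedy, no individual ever gets worse from one generation to the next.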

The proposed method combines two main techniques, PDO and DEA. Solving engineering design problems well requires finding optimal or near-optimal solutions for intricate systems with many constraints. DEA is an ML technique that uses the most unfavourable and favourable solutions to generate new solutions. This approach efficiently addresses the limitations of traditional optimizer models. PDO is a population‐based optimizer that exploits the behaviour patterns of prairie dogs to identify the most effective solutions. The PDO‐DE technique begins by initializing a set of solutions, as in Eq. (32), and produces further solutions using the DE technique. The cooperation between DE and PDO results in an enhanced PDO model.

$${A}_{ij}=rand\times \left(UpB-LoB\right)+LoB,i=\text{1,2},\dots ,IP,j=\text{1,2},\dots , Z.$$

(32)

Here, \(Z\) represents the dimension of each agent \({A}_{i}\), \(IP\) is the number of agents, and \(LoB\) and \(UpB\) denote the lower and upper bounds, respectively. Each agent \(A\) is updated by a hybrid of the DE and PDO techniques. A parameter \(Ran{d}_{r}\in [0,1]\) is randomly generated to switch between the PDO and DE mechanisms: when \(Ran{d}_{r}<0.5\), the PDO operators are used to alter the present solution, and when \(Ran{d}_{r}\ge 0.5\), the solution is updated using the DE operators. The formulation is stated as follows:

$${A}_{i}\left(t+1\right)=\left\{\begin{array}{ll}\text{Use PDO as in Eqs. (21){-}(28)}, & Ran{d}_{r}<0.5\\ \text{Apply DE as in Eqs. (29){-}(31)}, & \text{otherwise}\end{array}\right.$$

(33)
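The initialization of Eq. (32) and the switch of Eq. (33) can be sketched as follows. The names `init_agents` and `hybrid_step` are hypothetical, and the PDO and DE operators are passed in as placeholder callables rather than implemented, so this only illustrates the control flow.

```python
import numpy as np

def init_agents(ip, z, lo_b, up_b, rng):
    # Eq. (32): A_ij = rand * (UpB - LoB) + LoB
    return rng.random((ip, z)) * (up_b - lo_b) + lo_b

def hybrid_step(agent, pdo_update, de_update, rng):
    # Eq. (33): PDO when Rand_r < 0.5, DE otherwise.
    rand_r = rng.random()
    return pdo_update(agent) if rand_r < 0.5 else de_update(agent)

rng = np.random.default_rng(7)
agents = init_agents(ip=4, z=3, lo_b=-2.0, up_b=2.0, rng=rng)
# Placeholder operators that only tag which branch was taken.
branch = hybrid_step(agents[0], lambda a: "pdo", lambda a: "de", rng)
```

On average each agent is updated by PDO in half of the iterations and by DE in the other half.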

The choice of fitness function is a major factor influencing the performance of the PDO-DE technique. The parameter selection procedure includes a solution encoding scheme to evaluate the effectiveness of each candidate solution. In this study, the PDO-DE model uses precision as the main criterion for designing the FF, which is expressed below.

$$Fitness =\text{ max }\left(P\right)$$

(34)

$$P=\frac{TP}{TP+FP}$$

(35)

Here, \(TP\) denotes the number of true positives, and \(FP\) represents the number of false positives.
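As a quick numerical check of Eq. (35), precision can be computed from the two counts. The function name and the convention of returning 0 when there are no positive predictions are assumptions made for this sketch.

```python
def precision(tp, fp):
    """Eq. (35): P = TP / (TP + FP); returns 0.0 if nothing was predicted positive."""
    total = tp + fp
    return tp / total if total > 0 else 0.0

# 8 attacks flagged correctly and 2 false alarms give a precision of 0.8.
p_example = precision(tp=8, fp=2)
```

The tuner of Eq. (34) would then keep the hyperparameter setting whose validation precision is highest.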
