
Threat actors have abused Anthropic’s Claude Code agentic coding tool to run data extortion campaigns and to develop ransomware packages.

The company says that its tool has also been used in fraudulent North Korean IT worker schemes and to distribute lures for Contagious Interview campaigns, in Chinese APT campaigns, and by a Russian-speaking developer to create malware with advanced evasion capabilities.

AI-created ransomware

In one instance, tracked as ‘GTG-5004,’ a UK-based threat actor used Claude Code to develop and commercialize a ransomware-as-a-service (RaaS) operation.

The AI utility helped create all the required tools for the RaaS platform. In the modular ransomware, it implemented the ChaCha20 stream cipher with RSA key management, shadow copy deletion, options for targeting specific files, and the ability to encrypt network shares.

On the evasion front, the ransomware loads via reflective DLL injection and features syscall invocation techniques, API hooking bypass, string obfuscation, and anti-debugging.

Anthropic says that the threat actor relied almost entirely on Claude to implement the most knowledge-demanding parts of the RaaS platform, noting that, without AI assistance, they would most likely have failed to produce working ransomware.

“The most striking finding is the actor’s seemingly complete dependency on AI to develop functional malware,” reads the report.

“This operator does not appear capable of implementing encryption algorithms, anti-analysis techniques, or Windows internals manipulation without Claude’s assistance.”

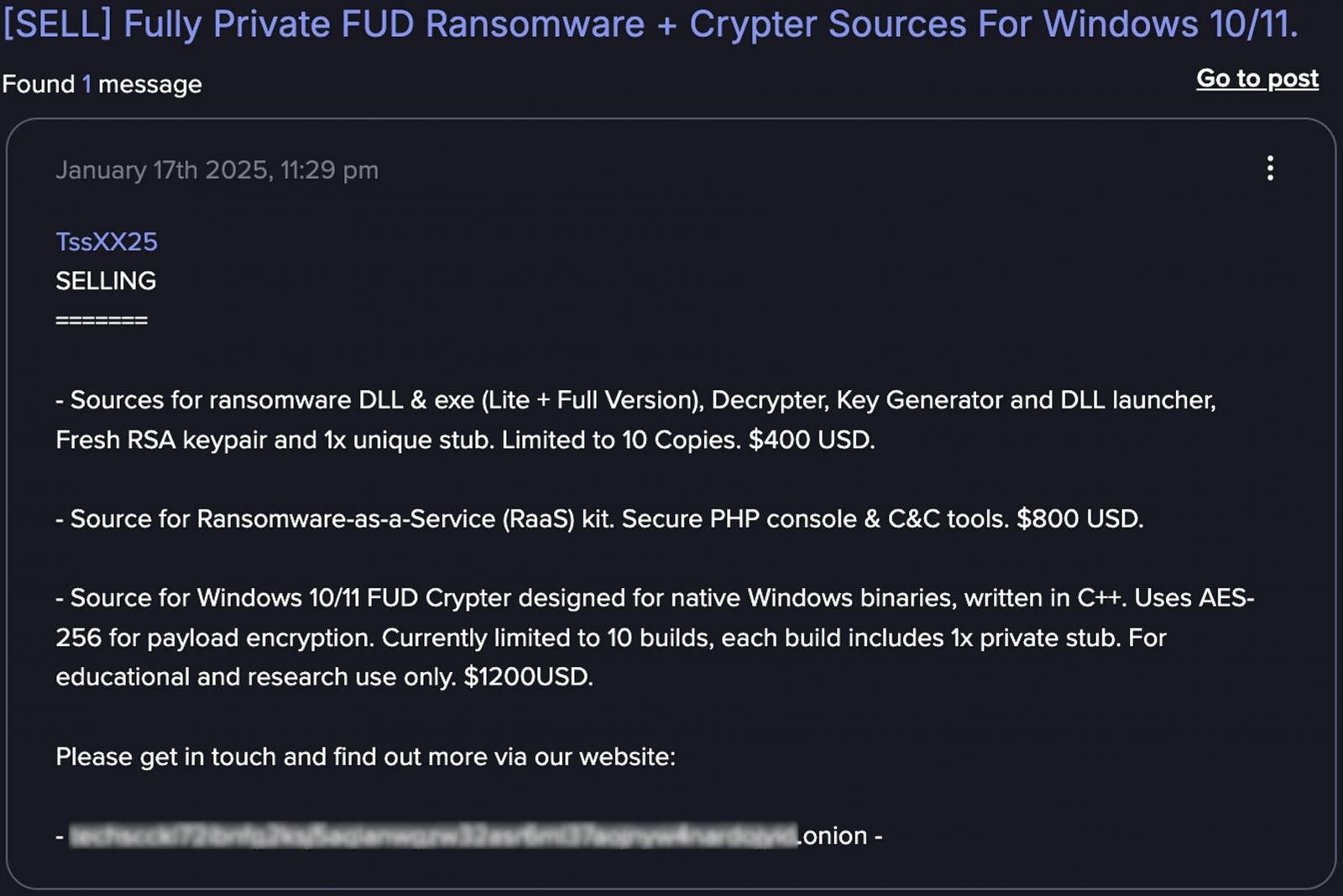

After creating the RaaS operation, the threat actor offered ransomware executables, kits with PHP consoles and command-and-control (C2) infrastructure, and Windows crypters for $400 to $1,200 on dark web forums such as Dread, CryptBB, and Nulled.

Source: Anthropic

AI-operated extortion campaign

In one of the analyzed cases, which Anthropic tracks as ‘GTG-2002,’ a cybercriminal used Claude as an active operator to conduct a data extortion campaign against at least 17 organizations in the government, healthcare, financial, and emergency services sectors.

The AI agent performed network reconnaissance and helped the threat actor achieve initial access, and then generated custom malware based on the Chisel tunneling tool to use for sensitive data exfiltration.

After the attack failed, Claude Code was used to make the malware hide itself better by providing techniques for string encryption, anti-debugging code, and filename masquerading.

Claude was subsequently used to analyze the stolen files to set the ransom demands, which ranged between $75,000 and $500,000, and even to generate custom HTML ransom notes for each victim.

“Claude not only performed ‘on-keyboard’ operations but also analyzed exfiltrated financial data to determine appropriate ransom amounts and generated visually alarming HTML ransom notes that were displayed on victim machines by embedding them into the boot process” – Anthropic.

Anthropic called this attack an example of “vibe hacking,” reflecting the use of AI coding agents as active partners in cybercrime rather than as tools consulted outside the operation itself.

Anthropic’s report includes additional examples where Claude Code was put to illegal use, albeit in less complex operations. The company says that its LLM assisted a threat actor in developing advanced API integration and resilience mechanisms for a carding service.

Another cybercriminal used AI for romance scams, generating “high emotional intelligence” replies, creating images that improved profiles, and developing emotional manipulation content to target victims, as well as providing multi-language support for wider targeting.

For each of the presented cases, the AI developer provides tactics and techniques that could help other researchers uncover new cybercriminal activity or make a connection to a known illegal operation.

Anthropic has banned all accounts linked to the malicious operations it detected, built tailored classifiers to detect suspicious use patterns, and shared technical indicators with external partners to help defend against these cases of AI misuse.
