[ad_1]

Researchers have identified two mainstream large language models (LLMs) that were recently jailbroken by cybercriminals to help create phishing emails, generate malicious code and provide hacking tutorials.

One was posted on the dark web site BreachForums in February by an account named keanu and was powered by Grok — the AI tool created by Elon Musk’s xAI.

That tool “appears to be a wrapper on top of Grok and uses the system prompt to define its character and instruct it to bypass Grok’s guardrails to produce malicious content,” researchers from security firm Cato Networks said in a new report.

The other, which the researchers said was posted on BreachForums in October by an account named xzin0vich, is powered by Mixtral, an LLM created by French company Mistral AI.

Both of the “uncensored” LLMs were available for purchase by BreachForums users, the researchers said. Cybercriminals have continued to revive the site even though law enforcement agencies have repeatedly taken down versions of it.

Mistral AI and xAI did not respond to repeated requests for comment about the malicious repurposing of their products.

Vitaly Simonovich, threat intelligence researcher at Cato Networks, said the issues they discovered are not vulnerabilities with Grok or Mixtral. Instead, the cybercriminals are using system prompts to define the behavior of the LLMs.

“When a threat actor submits a prompt, it is added to the entire conversation, which includes the system prompt that describes the functionality of the … variants,” Simonovich said. Essentially, the cybercriminals are successfully pushing the LLMs to ignore their own rules.

Simonovich added that there is a growing number of uncensored LLMs as well as “entire ecosystems” built on open-source LLMs with tailored system prompts.

“This development provides threat actors with access to powerful AI tools to enhance their cybercriminal operations,” he explained.

Solutions to the trend are difficult considering Mixtral is an open-source model that allows hackers to host it on their own and provide API access. Malicious tools built on Grok, which runs as a public API managed by xAI, may be easier to stop.

“They could theoretically identify these system prompts, potentially shutting off access and revoking API keys. However, this process can be a cat-and-mouse game,” Simonovich told Recorded Future News.

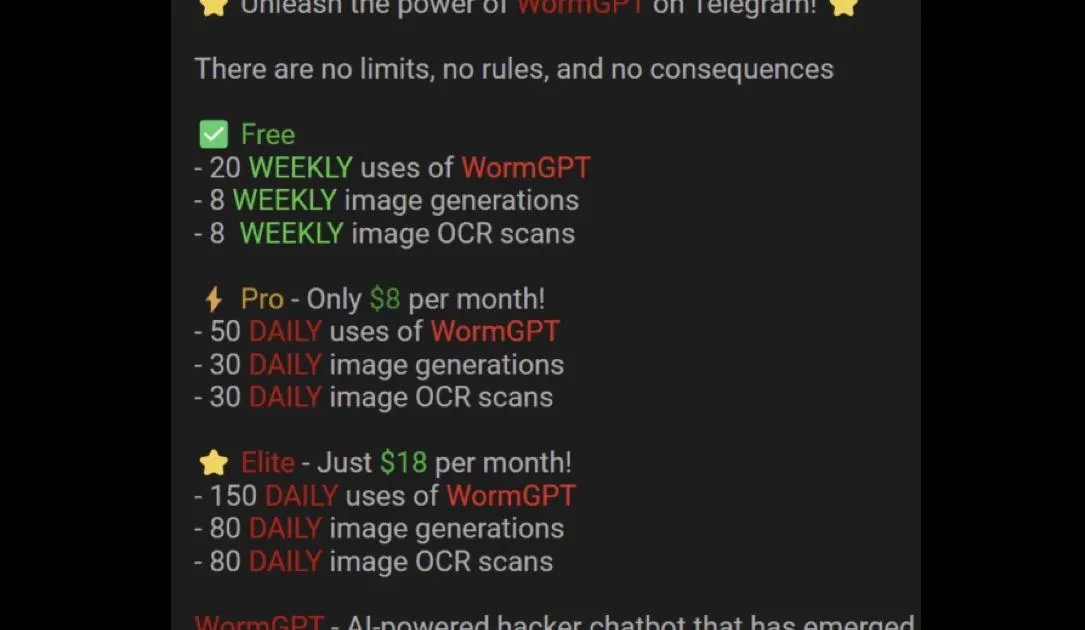

WormGPTs

Many of the uncensored LLMs you’ll find on cybercriminal forums are sold as WormGPT — named after one of the first generative AI tools that helped threat actors with a variety of tasks starting in June 2023.

The tool, powered by an open-source LLM created by EleutherAI, garnered significant media attention within weeks of its release and the creator was outed by cybersecurity reporter Brian Krebs before it was shut down.

But since then, multiple new versions also named WormGPT or called FraudGPT and EvilGPT have emerged on cybercriminal forums. The creators typically use a pricing structure ranging from €60 to €100 ($70 to $127) monthly or €550 (about $637) per year. Some offer private setups for €5,000 (about $5,790).

The Cato researchers said there is some evidence showing threat actors are recruiting AI experts to create custom uncensored LLMs.

They added that their research “shows these new iterations of WormGPT are not bespoke models built from the ground up, but rather the result of threat actors skillfully adapting existing LLMs.”

Apollo Information Systems’ Dave Tyson said the Cato report is only scratching the surface, warning that there are hundreds of uncensored LLMs on the dark web, including several built around other popular models like DeepSeek.

Tyson noted that the core tactic used to jailbreak AI is getting it to break its boundaries.

“Some of the simplest and most observed means to do this is by using a construct of historical research to hide nefarious activity; using the right paraphrasing to social engineer AI; or just leveraging an exploit of it,” he said.

“All of this discussion misses the USE of the models. Criminals are accelerating understanding and targeting, getting them faster to the decision to attack and pinpointing the right way to attack.”

The report comes one week after OpenAI released its own report about the way nation-states are misusing its flagship ChatGPT product. Russia, China, Iran, North Korea and other governments are repurposing it to write malware, mass create disinformation and learn about potential targets, the report said.

Several experts said Cato’s research and their own experience have shown that LLM guardrails are not sufficient in stopping threat actors from skirting safeguards and evading censorship efforts.

Darktrace’s director of AI strategy, Margaret Cunningham, said the company is seeing an emerging jailbreak-as-a-service market, which would “significantly lower the barrier to entry for threat actors, allowing them to leverage these tools without needing the technical skills to develop them themselves.”

On Monday, researchers at Spanish company NeuralTrust unveiled a report about Echo Chamber — a technique they said successfully jailbroke leading large language models with a 90% success rate.

“This discovery proves AI safety isn’t just about filtering bad words,” said Joan Vendrell, co-founder and CEO at NeuralTrust. “It’s about understanding and securing the model’s entire reasoning process over time.”

[ad_2]

Source link

Click Here For The Original Source.